Tree vs Forest¶

Let's See the Practical Problems in Decision Trees¶

Let's Have a Look at the Decision Tree's Hyperparameters¶

from sklearn.tree import DecisionTreeClassifier,DecisionTreeRegressor

from sklearn.ensemble import RandomForestClassifier,RandomForestRegressor

Decision Tree Parameters and Attributes¶

dtr=DecisionTreeClassifier()

dtr.get_params()

{'class_weight': None,

'criterion': 'gini',

'max_depth': None,

'max_features': None,

'max_leaf_nodes': None,

'min_impurity_decrease': 0.0,

'min_impurity_split': None,

'min_samples_leaf': 1,

'min_samples_split': 2,

'min_weight_fraction_leaf': 0.0,

'presort': False,

'random_state': None,

'splitter': 'best'}

DecisionTreeClassifier??

dir(dtr)

['__abstractmethods__', '__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__getstate__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__setstate__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_abc_cache', '_abc_negative_cache', '_abc_negative_cache_version', '_abc_registry', '_estimator_type', '_get_param_names', '_validate_X_predict', 'apply', 'class_weight', 'criterion', 'decision_path', 'feature_importances_', 'fit', 'get_params', 'max_depth', 'max_features', 'max_leaf_nodes', 'min_impurity_decrease', 'min_impurity_split', 'min_samples_leaf', 'min_samples_split', 'min_weight_fraction_leaf', 'predict', 'predict_log_proba', 'predict_proba', 'presort', 'random_state', 'score', 'set_params', 'splitter']

Drawbacks of Decision Tree Based Modeling¶

- Prone to overfitting!

- They are unstable, meaning that a small change in the data can lead to a large change in the structure of the optimal decision tree.

- They are often relatively inaccurate. Many other predictors perform better with similar data. This can be remedied by replacing a single decision tree with a random forest of decision trees, but a random forest is not as easy to interpret as a single decision tree.

- For data including categorical variables with different numbers of levels, information gain in decision trees is biased in favor of the attributes with more levels.

- Calculations can get very complex, particularly if many values are uncertain and/or if many outcomes are linked.
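The overfitting drawback listed above can be seen in a small experiment. This is only a sketch on synthetic noisy data (the data, the variable names, and the 100-tree forest are assumptions for illustration, not part of the notebook): a fully grown tree memorises the training set, while its score on held-out data is clearly lower. A forest trained on the same data usually generalises better.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic noisy sine wave (an assumption, used only for illustration).
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(400, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.3, size=400)

X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=0)

# A tree with no depth limit keeps splitting until every leaf is pure.
tree = DecisionTreeRegressor(random_state=0).fit(X_tr, y_tr)
forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_tr, y_tr)

tree_train_r2 = tree.score(X_tr, y_tr)
tree_test_r2 = tree.score(X_te, y_te)
forest_test_r2 = forest.score(X_te, y_te)
print("tree   train R^2:", tree_train_r2)
print("tree   test  R^2:", tree_test_r2)
print("forest test  R^2:", forest_test_r2)
```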

What Is a Good Decision?¶

- A single individual's decision won't be a good decision all the time (though sometimes it is)

- Good and bad decisions are subjective (they vary from person to person and situation to situation)

- In the end, it all comes down to satisfaction

Why Random Forest Works Well/Better in Practice¶

Psychological perspective:¶

- Find the solution to a particular problem based on the knowledge and suggestions of many individuals, and decide accordingly

- Choose those individuals wisely
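The "ask many individuals" idea can be made concrete with a little arithmetic. The sketch below assumes independent voters who are each right with probability 0.6 (both the independence and the 0.6 are illustrative assumptions) and computes how often a strict majority is right. The helper name `majority_correct_prob` is hypothetical.

```python
from math import comb

def majority_correct_prob(n_voters: int, p: float) -> float:
    """Probability that a strict majority of n independent voters is right,
    when each voter is right with probability p (binomial tail sum)."""
    need = n_voters // 2 + 1
    return sum(
        comb(n_voters, k) * p**k * (1 - p) ** (n_voters - k)
        for k in range(need, n_voters + 1)
    )

# The crowd gets more reliable as it grows, as long as p > 0.5.
for n in (1, 3, 11, 101):
    print(n, round(majority_correct_prob(n, 0.6), 3))
```

The same reasoning is why the voters have to be chosen wisely: if each one is right less than half the time, adding more of them makes the majority worse, not better.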

There Was Another Experiment Related to Neuroscience¶

(easy to explain, hard to write)

Random Forest¶

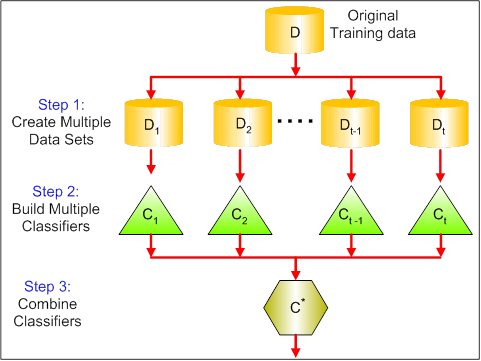

Ensemble (together):¶

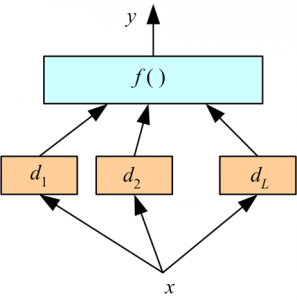

An ensemble is a collection of learners (base estimators) that together build a strong predictor or learning strategy.

You can combine any predictors or learning algorithms (not just trees) to achieve your end goal (high accuracy),

but keep the generalization error in mind.

Types of Ensemble Modeling¶

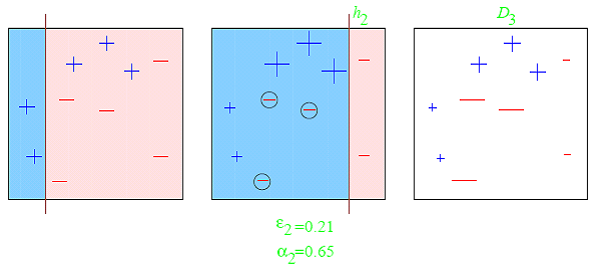

- Bagging

- Boosting

- Stacking

Bagging (Random Forest )¶

Boosting (Gradient Boosting)¶

Stacking (stacking two or three models)¶
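The three families can be tried side by side in scikit-learn. This is a minimal sketch on synthetic data, not the notebook's own experiment. It assumes a scikit-learn version that ships `StackingRegressor` (0.22 or later, which is newer than the 0.19.2 shown in the install log of this notebook), and the particular base models are arbitrary choices.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import (BaggingRegressor, GradientBoostingRegressor,
                              RandomForestRegressor, StackingRegressor)
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression problem (an assumption, used only for illustration).
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=0)

models = {
    # Bagging: many trees, each fit on a bootstrap sample, then averaged.
    "bagging": BaggingRegressor(DecisionTreeRegressor(), n_estimators=30,
                                random_state=0),
    # Boosting: trees added one after another, each fit to the remaining error.
    "boosting": GradientBoostingRegressor(random_state=0),
    # Stacking: a final model learns how to combine the base models' outputs.
    "stacking": StackingRegressor(
        estimators=[("rf", RandomForestRegressor(n_estimators=30, random_state=0)),
                    ("lin", LinearRegression())],
        final_estimator=LinearRegression()),
}

scores = {name: model.fit(X_tr, y_tr).score(X_te, y_te)
          for name, model in models.items()}
print(scores)
```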

Success of Ensemble Modeling¶

The success of an ensemble really lies in making each individual tree as different as possible from the others (uncorrelated).

How to achieve the greatest possible difference between the models?¶

- Difference in population

- Difference in hypothesis

- Difference in modeling technique

- Difference in initial seed
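Why uncorrelated models matter can be shown with one standard formula: if n models each have prediction variance sigma^2 and every pair has correlation rho, the variance of their average is rho * sigma^2 + (1 - rho) * sigma^2 / n. The sketch below just evaluates that formula. The function name `ensemble_variance` is hypothetical and the numbers are illustrative.

```python
def ensemble_variance(sigma2: float, rho: float, n_models: int) -> float:
    """Variance of the average of n_models predictors that each have
    variance sigma2 and pairwise correlation rho."""
    return rho * sigma2 + (1.0 - rho) * sigma2 / n_models

# With perfectly correlated models, averaging does not help at all;
# with uncorrelated models, the variance shrinks as 1/n.
for rho in (1.0, 0.5, 0.0):
    print(rho, [round(ensemble_variance(1.0, rho, n), 3) for n in (1, 10, 100)])
```

The first term does not shrink as more models are added, which is why the items in the list above all aim at lowering the correlation between models rather than simply adding more of them.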

Reference:

Hyperparameters for Decision Tree and Random Forest¶

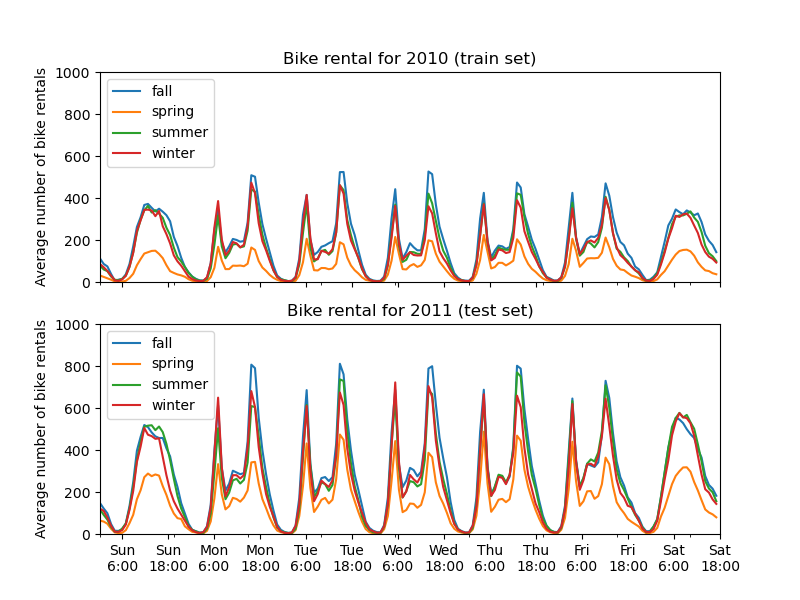

TIPS and Tricks to Use RandomForest for various things¶

from sklearn.datasets import load_boston,load_wine

import pandas as pd

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

from sklearn import metrics

import math

import numpy as np

from sklearn.ensemble import RandomForestClassifier,RandomForestRegressor

def rmse(x,y): return math.sqrt(((x-y)**2).mean())

def print_score(m):
    # [train RMSE, test RMSE, train R^2, test R^2, OOB score (if available)]
    res = [rmse(m.predict(X_train), y_train), rmse(m.predict(X_test), y_test),
           m.score(X_train, y_train), m.score(X_test, y_test)]
    if hasattr(m, 'oob_score_'): res.append(m.oob_score_)
    print(res)

house_price=load_boston(return_X_y=False)

#house_price['data']

#house_price['feature_names']

#house_price['target']

X_df=pd.DataFrame(data=house_price['data'],columns=house_price['feature_names'])

y=house_price['target']

X_df.head(10)

| | CRIM | ZN | INDUS | CHAS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | B | LSTAT |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.00632 | 18.0 | 2.31 | 0.0 | 0.538 | 6.575 | 65.2 | 4.0900 | 1.0 | 296.0 | 15.3 | 396.90 | 4.98 |

| 1 | 0.02731 | 0.0 | 7.07 | 0.0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2.0 | 242.0 | 17.8 | 396.90 | 9.14 |

| 2 | 0.02729 | 0.0 | 7.07 | 0.0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2.0 | 242.0 | 17.8 | 392.83 | 4.03 |

| 3 | 0.03237 | 0.0 | 2.18 | 0.0 | 0.458 | 6.998 | 45.8 | 6.0622 | 3.0 | 222.0 | 18.7 | 394.63 | 2.94 |

| 4 | 0.06905 | 0.0 | 2.18 | 0.0 | 0.458 | 7.147 | 54.2 | 6.0622 | 3.0 | 222.0 | 18.7 | 396.90 | 5.33 |

| 5 | 0.02985 | 0.0 | 2.18 | 0.0 | 0.458 | 6.430 | 58.7 | 6.0622 | 3.0 | 222.0 | 18.7 | 394.12 | 5.21 |

| 6 | 0.08829 | 12.5 | 7.87 | 0.0 | 0.524 | 6.012 | 66.6 | 5.5605 | 5.0 | 311.0 | 15.2 | 395.60 | 12.43 |

| 7 | 0.14455 | 12.5 | 7.87 | 0.0 | 0.524 | 6.172 | 96.1 | 5.9505 | 5.0 | 311.0 | 15.2 | 396.90 | 19.15 |

| 8 | 0.21124 | 12.5 | 7.87 | 0.0 | 0.524 | 5.631 | 100.0 | 6.0821 | 5.0 | 311.0 | 15.2 | 386.63 | 29.93 |

| 9 | 0.17004 | 12.5 | 7.87 | 0.0 | 0.524 | 6.004 | 85.9 | 6.5921 | 5.0 | 311.0 | 15.2 | 386.71 | 17.10 |

plt.plot(y)

plt.show()
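The cells that follow use `m`, `X_train`, `X_test`, `y_train` and `y_test`, but the split and the first fit are not shown in this export. A minimal sketch of that missing step is given below. It assumes the default 75/25 `train_test_split` (which is consistent with the `(379, 13)` training shape printed later in the notebook) and a 10-tree forest (consistent with the ten estimators listed next). Synthetic stand-in data is used so the snippet runs on its own; in the notebook, `X_df` and `y` would take the place of `X_demo` and `y_demo`.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

# Stand-in data with the same shape as the housing table (506 rows, 13 columns).
# X_demo / y_demo are hypothetical names; use X_df / y in the notebook itself.
X_demo, y_demo = make_regression(n_samples=506, n_features=13, noise=5.0,
                                 random_state=0)

# Default test_size is 0.25, which leaves 379 training rows out of 506.
X_train, X_test, y_train, y_test = train_test_split(X_demo, y_demo,
                                                    random_state=0)

# Ten trees, assumed from the ten estimators printed in the next cell.
m = RandomForestRegressor(n_estimators=10, n_jobs=-1, random_state=0)
m.fit(X_train, y_train)
print(X_train.shape, X_test.shape, len(m.estimators_))
```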

m.estimators_

[DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=441029088, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=949653668, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=1603443583, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=654777100, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=1950690762, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=978385060, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=1116456425, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=1626040669, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=913584784, splitter='best'),

DecisionTreeRegressor(criterion='mse', max_depth=None, max_features='auto',

max_leaf_nodes=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=1395632413, splitter='best')]

preds = np.stack([t.predict(X_test) for t in m.estimators_])

preds[:,0], np.mean(preds[:,0]), y_test[0]

(array([35.4, 28.2, 36.2, 36.2, 35.1, 34.9, 33.4, 36.2, 31.1, 29. ]), 33.57000000000001, 37.2)

preds.shape

pred=m.predict(X_test)

interval=np.std(preds,axis=0)  # per-sample spread across the trees

index=list(range(len(pred)))

Getting Confidence interval¶

plt.figure()

plt.plot(index,pred)

#plt.plot(dataframe.index,dataframe[col_name],label='original')

#plt.plot(dataframe.index,predicted_gb,label='predicted gradient descent')

#plt.plot(dataframe.index,upper,label='upper_boundary')

#plt.plot(dataframe.index,lower,label='lower_boundary')

# axis=0 gives one standard deviation per test sample (spread across the trees)
lower=pred-np.std(preds,axis=0)

upper=pred+np.std(preds,axis=0)

plt.xticks(rotation=80)

plt.xlabel('index')

plt.ylabel('pred')

plt.fill_between(index,lower,upper,alpha=0.4)

plt.show()

plt.plot(pred+np.std(preds,axis=0))

plt.show()
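The idea behind the band plotted above is that every tree gives its own prediction for a sample, so the spread of those predictions is a rough measure of how unsure the forest is about that sample. The sketch below shows the computation on synthetic data (an assumption for illustration). Note that a band of plus or minus one standard deviation is a heuristic, not a calibrated confidence interval.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data (an assumption, used only for illustration).
X, y = make_regression(n_samples=200, n_features=5, noise=5.0, random_state=0)
forest = RandomForestRegressor(n_estimators=10, random_state=0).fit(X, y)

# One row per tree, one column per sample.
tree_preds = np.stack([t.predict(X) for t in forest.estimators_])

# Averaging over the tree axis reproduces the forest's own prediction;
# the standard deviation over the same axis is the per-sample spread.
mean_pred = tree_preds.mean(axis=0)
spread = tree_preds.std(axis=0)
lower, upper = mean_pred - spread, mean_pred + spread

print(tree_preds.shape)
print(np.allclose(mean_pred, forest.predict(X)))
```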

list(zip(house_price['feature_names'],m.feature_importances_))

[('CRIM', 0.034234063847467536),

('ZN', 0.0008018637608521572),

('INDUS', 0.005177373717161881),

('CHAS', 0.000573198330816457),

('NOX', 0.015801871180649373),

('RM', 0.5173242657402186),

('AGE', 0.012546125661862906),

('DIS', 0.04733835047203008),

('RAD', 0.004016786209384228),

('TAX', 0.013947519900838706),

('PTRATIO', 0.015242455205511887),

('B', 0.011313045816218914),

('LSTAT', 0.32168308015698727)]

plt.plot([metrics.r2_score(y_test, np.mean(preds[:i+1], axis=0)) for i in range(10)])

plt.xlabel('No of estimators')

plt.ylabel('r2 score')

plt.show()

m = RandomForestRegressor(n_estimators=20, n_jobs=-1)

m.fit(X_train, y_train)

print_score(m)

[1.4202560483039897, 3.40112608725659, 0.9745201413029686, 0.8819658389066994]

m = RandomForestRegressor(n_estimators=40, n_jobs=-1)

m.fit(X_train, y_train)

print_score(m)

[1.371449205097873, 2.874513798326886, 0.9772411391697727, 0.9074017302211023]

m = RandomForestRegressor(n_estimators=80, n_jobs=-1)

m.fit(X_train, y_train)

print_score(m)

[1.3701758124748178, 2.93752818925995, 0.9772833828211569, 0.9032973974763153]

Hierarchical Clustering¶

import scipy.stats  # import the stats submodule explicitly for spearmanr

from scipy.cluster import hierarchy as hc

corr = np.round(scipy.stats.spearmanr(X_df).correlation, 4)

corr_condensed = hc.distance.squareform(1-corr)

z = hc.linkage(corr_condensed, method='average')

fig = plt.figure(figsize=(16,10))

dendrogram = hc.dendrogram(z, labels=X_df.columns, orientation='left', leaf_font_size=16)

plt.show()

!pip install pdpbox

Successfully installed pdpbox-0.2.0

rf=RandomForestRegressor()

from sklearn.linear_model import LinearRegression

rf.base_estimator=LinearRegression

rf.n_estimators

10

pdpbox¶

When using black box machine learning algorithms like random forest and boosting, it is hard to understand the relations between predictors and model outcome.

For example, in terms of random forest, all we get is the feature importance. Although we can know which feature is significantly influencing the outcome based on the importance calculation, it really sucks that we don’t know in which direction it is influencing. And in most of the real cases, the effect is non-monotonic.

We need some powerful tools to help understand the complex relations between predictors and model predictions. Refer to: https://github.com/SauceCat/PDPbox/tree/master/tutorials
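What a partial dependence curve measures can be written out by hand in a few lines. The sketch below is not PDPbox's implementation. It is a bare-bones version of the idea, on synthetic data, with a hypothetical helper named `partial_dependence_1d`: force one feature to a fixed value for every row, average the model's predictions, and repeat over a grid of values.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

def partial_dependence_1d(model, X, feature_idx, grid):
    """Average prediction when column `feature_idx` is forced to each grid value."""
    averages = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature_idx] = value  # overwrite the feature for every row
        averages.append(model.predict(X_mod).mean())
    return np.array(averages)

# Synthetic data (an assumption, used only for illustration).
X, y = make_regression(n_samples=300, n_features=4, noise=1.0, random_state=0)
model = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)

grid = np.linspace(X[:, 0].min(), X[:, 0].max(), 10)
pd_curve = partial_dependence_1d(model, X, feature_idx=0, grid=grid)
print(np.round(pd_curve, 2))
```

Plotting `pd_curve` against `grid` shows the direction and shape of the feature's effect, which is exactly what a single feature importance number cannot show.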

Highlights¶

- Helper functions for visualizing target distribution as well as prediction distribution.

- Proper way to handle one-hot encoding features.

- Solution for handling complex mutual dependency among features.

- Support multi-class classifier.

- Support two variable interaction partial dependence plot.

Everything is right, but why cover this in a tree-based tutorial?¶

Ans: PDP is used with tree-based models to interpret the feature importance¶

from above Sklearn documentation¶

from pdpbox import pdp

#from plotnine import *

X_df.nunique()

CRIM 504 ZN 26 INDUS 76 CHAS 2 NOX 81 RM 446 AGE 356 DIS 412 RAD 9 TAX 66 PTRATIO 46 B 357 LSTAT 455 dtype: int64

pdp.pdp_isolate?

X_train.shape,X_df.shape

((379, 13), (506, 13))

def plot_pdp(feat, clusters=None, feat_name=None):
    feat_name = feat_name or feat
    # `m` is the fitted forest; `feats` is the list of feature names
    p = pdp.pdp_isolate(model=m, dataset=X_df, feature=feat, model_features=feats)
    return pdp.pdp_plot(p, feat_name, plot_lines=True,
                        cluster=clusters is not None, n_cluster_centers=clusters)

print('RAD')

plot_pdp('RAD')

plt.show()

RAD

print('CHAS')

plot_pdp('CHAS',clusters=5)

plt.show()

CHAS

print('RAD')

plot_pdp('RAD',clusters=5)

plt.show()

RAD

feats = house_price['feature_names']

feats

array(['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD',

'TAX', 'PTRATIO', 'B', 'LSTAT'], dtype='<U7')

feats = house_price['feature_names']

print(len(feats))

p = pdp.pdp_interact(m, X_df, feats,features=house_price['feature_names'])

pdp.pdp_interact_plot(p, feats)

plt.show()

13

y_test.max(),y_test.min(),np.unique(y_test,return_counts=True)

(50.0,

8.4,

(array([ 8.4, 8.5, 8.7, 10.4, 10.5, 11.5, 11.7, 11.8, 11.9, 12. , 12.3,

13.1, 13.3, 13.4, 13.8, 13.9, 14.1, 14.5, 15. , 15.4, 15.6, 16.2,

16.3, 16.4, 16.6, 16.7, 16.8, 17.3, 17.4, 17.5, 17.8, 18.1, 18.4,

18.5, 18.6, 18.9, 19. , 19.2, 19.3, 19.4, 19.5, 19.6, 19.7, 19.8,

19.9, 20.1, 20.3, 20.4, 20.5, 20.6, 21. , 21.2, 21.4, 21.7, 21.9,

22. , 22.9, 23. , 23.1, 23.3, 23.6, 23.7, 23.8, 23.9, 24.5, 24.8,

25. , 25.1, 26.2, 26.6, 26.7, 27.1, 27.9, 28.4, 28.5, 28.7, 29. ,

29.1, 29.4, 29.6, 29.8, 29.9, 31.6, 31.7, 32. , 32.5, 32.7, 32.9,

33.2, 34.6, 34.7, 36. , 36.1, 37.2, 37.9, 39.8, 42.3, 44.8, 48.5,

48.8, 50. ]),

array([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 3, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 1, 2, 1, 2,

1, 2, 3, 1, 1, 1, 1, 1, 1, 3, 1, 1, 2, 2, 3, 1, 1, 1, 1, 3, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 6], dtype=int64)))

RandomForestClassifier??

Hyperparameters:¶

* n_estimators

* OOB score

* min_samples_leaf

* max_features

n_estimators:¶

"Estimator" is scikit-learn's term for an individual decision tree here; n_estimators specifies the number of decision trees used to build the random forest.

OOB Score :¶

Out-of-bag score: a validation score obtained while the forest is being built, by evaluating each decision tree (estimator) on the training rows that were left out of its bootstrap sample (it is used for validation only and has no effect on training).
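Both n_estimators and the OOB score can be checked on a fitted forest. This is a small sketch on synthetic data (an assumption for illustration). It also prints the chance that a given row is missing from one bootstrap sample of size n, which is (1 - 1/n)^n, or roughly a third of the rows. Those left-out rows are what the out-of-bag score is computed on.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data (an assumption, used only for illustration).
X, y = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=0)

# oob_score=True asks the forest to score each row using only the trees
# whose bootstrap sample did not contain that row.
forest = RandomForestRegressor(n_estimators=100, oob_score=True, random_state=0)
forest.fit(X, y)

# Chance that a given row is absent from one bootstrap sample of size n.
n = X.shape[0]
left_out_fraction = (1 - 1 / n) ** n

print("number of trees:   ", len(forest.estimators_))
print("training R^2:      ", forest.score(X, y))
print("out-of-bag R^2:    ", forest.oob_score_)
print("left-out fraction: ", left_out_fraction)
```

The training score is typically optimistic, so the out-of-bag value is the more honest of the two when no separate validation set is available.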

min_samples_leaf¶

The minimum number of samples a node must contain to be accepted as a leaf.
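The effect of min_samples_leaf can be checked directly on a fitted tree. The sketch below uses synthetic data, and `leaf_sizes` is a hypothetical helper name. It relies on the fitted tree's `tree_` structure, where scikit-learn marks leaf nodes with a child index of -1.

```python
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (an assumption, used only for illustration).
X, y = make_regression(n_samples=200, n_features=5, noise=5.0, random_state=0)

def leaf_sizes(tree):
    """Number of training samples that ended up in each leaf of a fitted tree."""
    t = tree.tree_
    is_leaf = t.children_left == -1  # scikit-learn marks leaves with -1
    return t.n_node_samples[is_leaf]

default_tree = DecisionTreeRegressor(random_state=0).fit(X, y)
small_tree = DecisionTreeRegressor(min_samples_leaf=5, random_state=0).fit(X, y)

print("default:            ", len(leaf_sizes(default_tree)), "leaves, smallest =",
      leaf_sizes(default_tree).min())
print("min_samples_leaf=5: ", len(leaf_sizes(small_tree)), "leaves, smallest =",
      leaf_sizes(small_tree).min())
```

Raising the value gives fewer, larger leaves, which smooths the predictions and is a common way to rein in overfitting.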

max_features (try to guess this):¶

I had this one entirely wrong for a long time; only yesterday did I learn what max_features really is:

- The number of features to consider when looking for the best split

- http://scikit-learn.org/stable/auto_examples/ensemble/plot_ensemble_oob.html
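To see that max_features is about the candidates considered at each split, and not about how many columns a tree is given overall, one can inspect the fitted trees. This is a sketch on synthetic data with 13 columns (an assumption chosen to mirror the housing table). It reads the `max_features_` attribute that each fitted scikit-learn tree exposes.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data with 13 columns (an assumption, used only for illustration).
X, y = make_regression(n_samples=300, n_features=13, noise=5.0, random_state=0)

per_split = {}
for setting in (1.0, 0.5, "sqrt"):
    forest = RandomForestRegressor(n_estimators=20, max_features=setting,
                                   random_state=0).fit(X, y)
    # Each fitted tree records how many features it samples at every split.
    per_split[setting] = forest.estimators_[0].max_features_

print(per_split)
```

Smaller values force the trees to try different features at each split, which makes the trees less correlated with each other at the cost of each tree being a little weaker.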

All the other parameters are largely variants of these parameters (the scikit-learn documentation clearly differentiates between them)¶

References¶

Psychological perspective:¶

https://www.nationalgeographic.com/science/phenomena/2013/01/31/the-real-wisdom-of-the-crowds/

Model Perspective:¶

http://dataaspirant.com/2017/05/22/random-forest-algorithm-machine-learing/

https://towardsdatascience.com/the-random-forest-algorithm-d457d499ffcd

https://link.springer.com/content/pdf/10.1023%2FA%3A1010933404324.pdf